LSTM

🗓 2017年12月18日 📁 文章归类: 0x24_NLP

版权声明:本文作者是郭飞。转载随意,标明原文链接即可。

原文链接:https://www.guofei.site/2017/12/18/lstm.html

介绍

是一种RNN算法,Schmidhuber于1997年提出

传统的RNN训练方法都有明显的缺点

- Gradient Descent和Back-Propagation Through Time(BPTT)

- Real-Time Recurrent Learning(RTRL)

LSTM

RNN的问题

- 文本内容可能在很远的地方有关联

- 梯度消失/梯度爆炸

时间间隔过长,这些算法都有梯度弥散问题

(还有一个GRU算法,可以看成是LSTM的简化版,就不另写了)

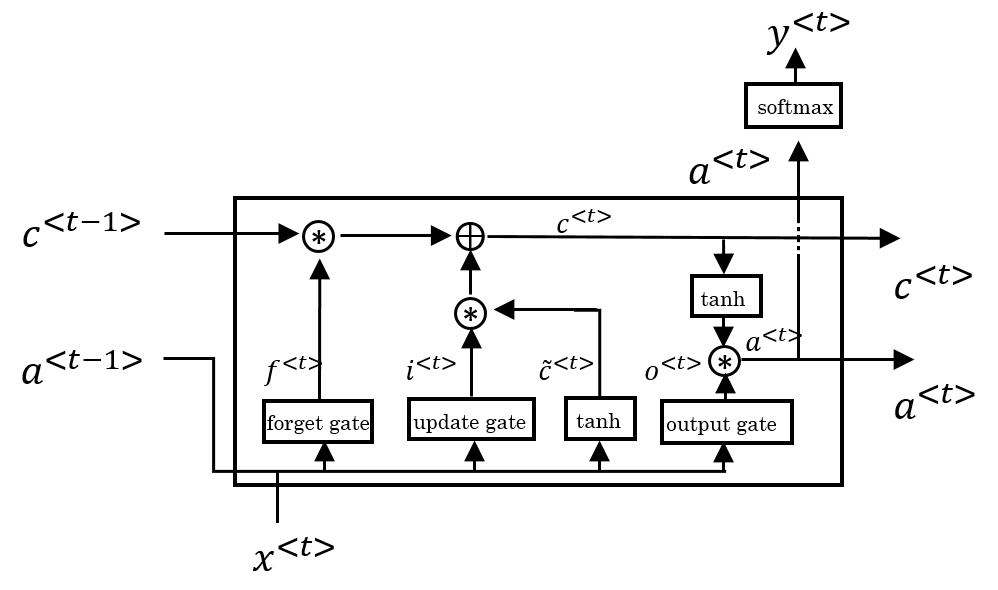

原理

- 本次可能保留到长程的信息 \(\tilde c^{<t>}=\tanh (W_c[a^{<t-1>},x^{<t>}]+b_c)\)

- update gate \(\Gamma_u =\sigma (W_u[a^{<t-1>},x^{<t>}]+b_u)\)

- forget gete \(\Gamma_f =\sigma (W_f[a^{<t-1>},x^{<t>}]+b_f)\)

- output gate \(\Gamma_o =\sigma (W_o[a^{<t-1>},x^{<t>}]+b_o)\)

- 传递要下一期的长程信息 \(c^{<t>}=\Gamma_u \bigotimes \tilde c^{<t>}+\Gamma_f \bigotimes c^{<t-1>}\)

- 本次短期信息 \(a^{<t>}=\Gamma_o \bigotimes \tanh c^{<t>}\)

- 输出 \(y^{<t>}=softmax(W_y a^{<t>}+b_y)\)

实现

step1:导入数据

import requests

url = 'https://www.guofei.site/datasets/cv/MNIST_data_csv.zip'

r = requests.get(url)

with open('MNIST_data_csv.zip', 'wb') as f:

f.write(r.content)

import zipfile

azip = zipfile.ZipFile('MNIST_data_csv.zip')

azip.extractall()

# %%

import pandas as pd

mnist_train_images = pd.read_csv('mnist_train_images.csv', header=None).values

mnist_test_images = pd.read_csv('mnist_test_images.csv', header=None).values

mnist_train_labels = pd.read_csv('mnist_train_labels.csv', header=None).values

mnist_test_labels = pd.read_csv('mnist_test_labels.csv', header=None).values

n_train=len(mnist_train_images)

#%%

step2:构建网络

import tensorflow as tf

import numpy as np

# %%

# 设置训练的超参数,学习率 训练迭代最大次数,输入数据的个数

batch_size = 128

# 神经网络参数

n_inputs = 28 # 输出层的n

n_steps = 28 # 长度

n_hidden = 128 # 隐藏层的神经元个数

n_classes = 10 # MNIST的分类类别 (0-9)

# 定义输出数据及其权重

# 输入数据的占位符

x = tf.placeholder("float", [None, n_steps, n_inputs])

y = tf.placeholder("float", [None, n_classes])

weight1 = tf.Variable(tf.random_normal([n_inputs, n_hidden]))

weight2 = tf.Variable(tf.random_normal([n_hidden, n_classes]))

bias1 = tf.Variable(tf.random_normal([n_hidden, ]))

bias2 = tf.Variable(tf.random_normal([n_classes, ]))

# 定义RNN模型

# 这个地方有点绕,思路是这样的:

# 每张图片是 28*28像素的,把每个图像看成 (28个有序向量*28维向量)

# RNN第一层的每次输入就是这个28维向量,所以才需要用两个reshape

x_in = tf.reshape(x, [-1, n_inputs]) # X_in = (128 batch * 28 steps, 28 inputs)

x_in = tf.matmul(x_in, weight1) + bias1

x_in = tf.reshape(x_in, [-1, n_steps, n_hidden]) # X_in = (128 batch, 28 steps, 128 hiddens)

lstm_cell = tf.nn.rnn_cell.LSTMCell(n_hidden, forget_bias=1.0, state_is_tuple=True)

init_state = lstm_cell.zero_state(batch_size, dtype=tf.float32)

outputs, final_state = tf.nn.dynamic_rnn(lstm_cell, x_in, initial_state=init_state, time_major=False)

pred = tf.matmul(final_state[1], weight2) + bias2 # pred = (128 batch * )

# 定义损失函数和优化器,采用AdamOptimizer优化器

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))

train_op = tf.train.AdamOptimizer(learning_rate=0.001).minimize(cost)

# 定义模型预测结果及准确率计算方法

correct_pred = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))

accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

step3:训练

sess = tf.Session()

sess.run(tf.global_variables_initializer())

step = 0

# 训练,达到最大迭代次数

for i in range(300):

choice_indexes = np.random.choice(n_train, batch_size)

batch_xs, batch_ys = mnist_train_images[choice_indexes], mnist_train_labels[choice_indexes]

# Reshape data to get 28 seq of 28 elements

batch_xs = batch_xs.reshape((batch_size, n_steps, n_inputs))

sess.run(train_op, feed_dict={x: batch_xs, y: batch_ys})

if step % 20 == 0:

print(sess.run(accuracy, feed_dict={x: batch_xs, y: batch_ys}))

step += 1

参考文献

https://blog.csdn.net/zhaojc1995/article/details/80572098

《Matlab神经网络原理与实例精解》陈明,清华大学出版社

《神经网络43个案例》王小川,北京航空航天大学出版社

《人工神经网络原理》马锐,机械工业出版社

白话深度学习与TensorFlow,高扬,机械工业出版社

《TensorFlow实战》中国工信出版集团

您的支持将鼓励我继续创作!